RIT Problems Caused by Modifying a Very Large HBase Table

Problem Description

The cluster needed to update the table version, so the table was disabled first:

```shell
echo "disable 'xxxx_jdid_new'" | hbase shell -n
```

However, the table is extremely large (100 TB+), and this one operation left a large number of its regions in RIT (Region-In-Transition).

Tools: HBCK2 (hbase-operator-tools)

```shell
wget https://dlcdn.apache.org/hbase/hbase-operator-tools-1.2.0/hbase-operator-tools-1.2.0-bin.tar.gz
```
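Every HBCK2 call in this post goes through `hbase hbck -j <path-to-jar>`. As a convenience, here is a small bash sketch, assuming the tarball was unpacked into `$HOME` (for example with `tar -xzf hbase-operator-tools-1.2.0-bin.tar.gz -C ~`), which yields the `~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar` path used later in this post. The names `hbck2_jar`, `hbck2`, `HBCK2_HOME`, `HBCK2_VERSION` and `DRY_RUN` are hypothetical and belong to this sketch, not to HBCK2:

```shell
#!/usr/bin/env bash
# Sketch: resolve the HBCK2 jar inside an unpacked hbase-operator-tools
# directory and wrap "hbase hbck -j <jar>". HBCK2_HOME, HBCK2_VERSION and
# DRY_RUN are hypothetical variables used only by this sketch.

# Print the jar path for <parent_dir> <version>; fail if the file is missing.
hbck2_jar() {
  local parent=$1 version=$2
  local jar="$parent/hbase-operator-tools-$version/hbase-hbck2/hbase-hbck2-$version.jar"
  if [ -f "$jar" ]; then
    printf '%s\n' "$jar"
  else
    echo "HBCK2 jar not found: $jar" >&2
    return 1
  fi
}

# Run an HBCK2 command (defaults: $HOME and 1.2.0); with DRY_RUN=1, only print it.
hbck2() {
  local jar
  jar=$(hbck2_jar "${HBCK2_HOME:-$HOME}" "${HBCK2_VERSION:-1.2.0}") || return 1
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo hbase hbck -j "$jar" "$@"
  else
    hbase hbck -j "$jar" "$@"
  fi
}
```

For example, `DRY_RUN=1 hbck2 assigns -o 83504493dad030dd984351d836f1e038` prints the full command without touching the cluster; drop `DRY_RUN=1` to actually run it.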

Resolving Blocked RIT

HMaster log:

```
2023-03-01 21:04:02,810 WARN [ProcExecTimeout] assignment.AssignmentManager: STUCK Region-In-Transition rit=OPENING, location=idc-bj-hbase01-node198.hostname.com,16020,1657113851089, table=xxxxx_ip_new, region=83504493dad030dd984351d836f1e038
```

Region operation: run assign on the stuck region.

The region then returns to normal. Use the HBase canary or a Get against the table to confirm that it has fully recovered.
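With many regions stuck at once, the encoded names can be pulled out of the HMaster log instead of being copied by hand. A bash sketch, assuming log lines in the `STUCK Region-In-Transition ... table=<name>, region=<encoded>` form shown above; `stuck_regions` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: read an HMaster log on stdin and print the distinct encoded region
# names reported as STUCK, optionally only for one table (first argument).
# Assumes the "... table=<name>, region=<32 hex chars>" format shown above.
stuck_regions() {
  local table=${1:-}
  grep 'STUCK Region-In-Transition' |
    if [ -n "$table" ]; then grep -F "table=$table,"; else cat; fi |
    sed -n 's/.*region=\([0-9a-f]\{32\}\).*/\1/p' |
    sort -u
}
```

For example, with the master log saved as `hbase-master.log` (a placeholder name) and GNU `xargs`: `stuck_regions xxxxx_ip_new < hbase-master.log | xargs -r -n 50 hbase hbck -j ~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar assigns -o`. The batch size of 50 is arbitrary; it only keeps each command line short.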

HFile errors on the RegionServer:

```
2023-03-01 20:13:46,242 WARN [region-location-2] balancer.RegionLocationFinder: IOException during HDFSBlocksDistribution computation. for region = e6f6b7e51ece844ebb25346ee1d3e424
```

Use HBCK2 `filesystem --fix` to repair the problem above:

```shell
hbase hbck -j ~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar filesystem --fix app_id_v2
```

If that does not fix the problem, force-delete the reference files under the affected region.

For example, suppose region `ba3a916a55749216a59a923816819058` of table `geo_ip_new` is the one with the problem.
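To locate the reference files, one option is to list the region directory recursively and keep only store files named like split references, `<hfile>.<parent-encoded-region>`. A bash sketch, assuming the default `hbase.rootdir` of `/hbase` and the `default` namespace (adjust both for your cluster); `region_dir` and `ref_files` are hypothetical helpers, and they only print paths, leaving the delete itself to you:

```shell
#!/usr/bin/env bash
# Sketch: region directory for <table> <encoded_region>, ASSUMING
# hbase.rootdir=/hbase and the "default" namespace.
region_dir() {
  printf '/hbase/data/default/%s/%s\n' "$1" "$2"
}

# Read "hdfs dfs -ls -R" output on stdin; skip directories (permissions start
# with "d") and print files named <hfile>[_SeqId_N_].<32-hex parent region>.
ref_files() {
  awk '$1 !~ /^d/ { print $NF }' |
    grep -E '/[0-9a-f]+(_SeqId_[0-9]+_)?\.[0-9a-f]{32}$'
}
```

For example, `hdfs dfs -ls -R "$(region_dir geo_ip_new ba3a916a55749216a59a923816819058)" | ref_files` lists the candidates; review them (or copy them aside) before removing anything with `hdfs dfs -rm`.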

Then assign the region:

```shell
hbase hbck -j ~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar assigns -o ba3a916a55749216a59a923816819058
```

If the canary reports that a region is not online, the same assign command resolves it as well:

```
ERROR: org.apache.hadoop.hbase.NotServingRegionException: app_ip_new,0562439629376612551|2020022513,1654594690934.513c23e655b4fb5ff72049f79f8d0a13. is not online on idc01-hbase01-node188.hostname.com,16020,1657111672521
```
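When the canary reports many such regions, the encoded name can be cut out of each message: it is the 32-character hex field between the last two dots of the full region name. A bash sketch, assuming messages in the form above; `not_online_regions` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: read canary output on stdin and print the distinct encoded region
# names from "<table>,<startkey>,<regionid>.<encoded>. is not online on ..." lines.
not_online_regions() {
  sed -n 's/.*\.\([0-9a-f]\{32\}\)\. is not online on .*/\1/p' | sort -u
}
```

Its output, one encoded name per line, can be passed to `hbase hbck -j <jar> assigns -o`, for example through `xargs`.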

Advanced Usage

Batch-assign regions in the CLOSED state

```shell
echo "scan 'hbase:meta', {COLUMN => 'info:state'}" | hbase shell > tmp_txt_p7.txt
```

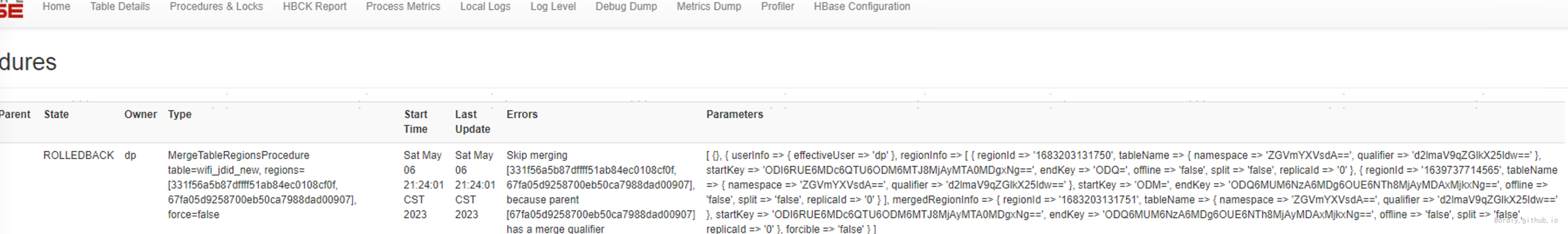
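One way to continue from the saved scan is to keep only cells with `value=CLOSED` and extract each region's encoded name. A bash sketch, assuming each meta cell line has the form `<table>,<startkey>,<regionid>.<encoded>. column=info:state, ..., value=<STATE>`; `closed_regions` is a hypothetical helper name. Every region of a table that is meant to stay disabled is also CLOSED, so the optional table argument restricts the result to one table:

```shell
#!/usr/bin/env bash
# Sketch: read "scan 'hbase:meta', {COLUMN => 'info:state'}" output on stdin
# and print the distinct encoded names of CLOSED regions, optionally only for
# one table (first argument). CLOSING or OPEN rows are ignored.
closed_regions() {
  local table=${1:-}
  grep -E 'column=info:state, .*value=CLOSED[[:space:]]*$' |
    if [ -n "$table" ]; then awk -v t="$table," 'index($1, t) == 1'; else cat; fi |
    sed -n 's/.*\.\([0-9a-f]\{32\}\)\.[[:space:]]\{1,\}column=info:state.*/\1/p' |
    sort -u
}
```

For example, `closed_regions geo_ip_new < tmp_txt_p7.txt | xargs -r -n 50 hbase hbck -j ~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar assigns -o` would assign that table's CLOSED regions in batches of 50 (GNU `xargs`).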

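Locks

To release a lock held by a stuck procedure (pid 2277 in this case), bypass the procedure with HBCK2; `-r` bypasses its child procedures as well: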

```shell
hbase --config ../data-sync-dispatcher-nj-ods-2-tx-emr/config/emr-ssd-hbase hbck -j ~/hbase-operator-tools-1.2.0/hbase-hbck2/hbase-hbck2-1.2.0.jar bypass -r 2277
```
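To find candidate procedure ids, one option is to search the HMaster log for the affected table. A bash sketch, assuming procedures appear in the log in the `pid=<n>, ppid=<n>, state=...` form; `proc_ids` is a hypothetical helper. Check every id it prints (for example on the Procedures & Locks page of the Master web UI) before bypassing, because HBCK2 leaves the entities of a bypassed procedure in an inconsistent state that needs manual fixup:

```shell
#!/usr/bin/env bash
# Sketch: read an HMaster log on stdin and print, in numeric order, the
# distinct "pid=<n>" values on lines containing the first argument (e.g. a
# table name). The "(^|[^p])" guard keeps "ppid=<n>" (parent ids) out.
proc_ids() {
  grep -F -- "$1" |
    grep -oE '(^|[^p])pid=[0-9]+' |
    sed 's/.*pid=//' |
    sort -un
}
```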

Summary

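Running DDL against a very large table is a disaster: here, a single disable on a 100 TB+ table left large numbers of regions stuck in transition.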

References

- Title: RIT Problems Caused by Modifying a Very Large HBase Table

- Author: Ordiy

- Created at : 2023-05-07 14:48:25

- Updated at : 2025-03-26 09:39:38

- Link: https://ordiy.github.io/posts/2023-01-01-hbase2-fix-rit/

- License: This work is licensed under CC BY 4.0.