oldWALs File-Count Anomaly Caused by an Abnormal HBase Peer State

Background

    HBase Version 1.0.0-CDH5.5

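This cluster is the primary (source) cluster and had one peer cluster configured. After the peer cluster was taken offline, disable_peer was run against it.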

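Incident Timeline

An HBase RegionServer suddenly hit errors and went down.

Because the RegionServer could not serve traffic, RPC read/write traffic became abnormal:

Restarting the RegionServer failed with the following exception: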

    Feb 22, 12:01:46.497 PM WARN org.apache.hadoop.hbase.coordination.SplitLogManagerCoordination

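Diagnosis

The log indicates that the number of files under /hbase/oldWALs exceeded the HDFS maximum file limit of 10485761 (the per-directory limit is set by dfs.namenode.fs-limits.max-directory-items, whose default is 1048576). Checking the directory: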

    hdfs dfs -du -s /hbase/oldWALs

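The directory had grown to 100 TB, and it was no longer possible to enumerate the files inside it. /hbase/oldWALs is the WAL archive directory: once all KV data recorded in a WAL file has been confirmed as persisted from the MemStore to HFiles, the WAL file is moved there. When a peer is configured, WALs that have not yet been replicated successfully are also kept in this directory.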
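When a directory is too large to list, `hdfs dfs -count` prints a single summary line (directory count, file count, content size, path) instead of one line per file. A minimal sketch, assuming that standard four-column output; the helper name `wal_file_count` is hypothetical:

```shell
# Hypothetical helper: extract the file count from one line of
# `hdfs dfs -count <path>` output, whose columns are
# DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
wal_file_count() {
  awk '{ print $2 }'
}

# Usage against a live cluster (not run here):
#   hdfs dfs -count /hbase/oldWALs | wal_file_count
```

Comparing the result with the configured per-directory limit shows how close the directory is to blocking new WAL archiving.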

Resolution

Clean up /hbase/oldWALs

    # current time as a Unix timestamp (seconds)
    today=$(date +'%s')
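The rest of the cleanup script is not shown above. One way such an age-based cleanup could look is sketched below: it filters `hdfs dfs -ls` output by modification date and prints the paths that are older than a cutoff. The helper name `select_old_wals` and the 7-day window in the usage comment are assumptions, not the author's script. Deleting files this way bypasses the Master's cleaner checks, so it only fits the case where no peer still needs those WALs.

```shell
# Hypothetical helper: read `hdfs dfs -ls` output on stdin and print the
# paths of files whose modification date is earlier than the cutoff
# (YYYY-MM-DD). ISO dates compare correctly as plain strings.
select_old_wals() {
  cutoff="$1"
  awk -v cutoff="$cutoff" '
    # Skip the "Found N items" header and directory entries.
    NF >= 8 && $1 !~ /^d/ && ($6 "") < (cutoff "") { print $8 }
  '
}

# Usage against a live cluster (not run here; GNU date and xargs assumed):
#   cutoff=$(date -d '7 days ago' +%F)
#   hdfs dfs -ls /hbase/oldWALs | select_old_wals "$cutoff" \
#     | xargs -r -n 500 hdfs dfs -rm -skipTrash
```

Batching the deletions through `xargs -n` keeps each `hdfs dfs -rm` invocation to a bounded number of paths.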

Data

Cause

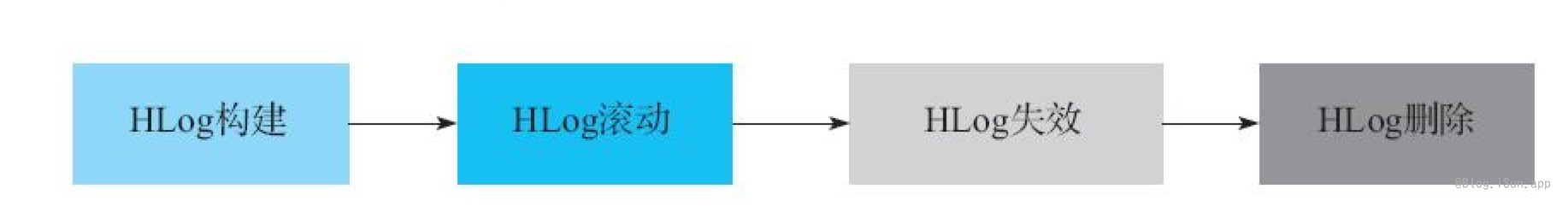

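HLog (WAL) files have a lifecycle. The HLog lifecycle:

By default, the active HBase Master starts a background thread that runs every hbase.master.cleaner.interval (default 1 minute; HDP3 changed it to 1 hour), checks all expired log files under oldWALs, and decides whether each one can be deleted.

A file is deleted only when both of the following conditions are met: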

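- The HLog file is no longer taking part in primary-to-peer replication, that is, no replication queue still needs it (see the logic in ReplicationHFileCleaner.getDeletableFiles).

- The HLog file has been in the oldWALs directory for longer than hbase.master.logcleaner.ttl (default 10 minutes).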
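The two conditions can be written as a single predicate. This is an illustrative sketch only, not HBase's implementation; the function name `wal_is_deletable` and its arguments are hypothetical:

```shell
# Hypothetical predicate mirroring the two conditions above.
#   $1: how long the file has been in oldWALs, in milliseconds
#   $2: hbase.master.logcleaner.ttl, in milliseconds
#   $3: "yes" if a replication queue still references the file, else "no"
# Returns 0 (deletable) only when both conditions hold.
wal_is_deletable() {
  age_ms="$1"; ttl_ms="$2"; in_queue="$3"
  [ "$in_queue" = "no" ] && [ "$age_ms" -gt "$ttl_ms" ]
}

# Example: 15 minutes old, 10-minute TTL, still queued for a peer.
#   wal_is_deletable 900000 600000 yes   # returns non-zero: not deletable
```

A peer that is disabled but not removed keeps its replication queue, so the WALs it references fail the first condition no matter how old they are. That is consistent with the unbounded growth of oldWALs seen in this incident.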

    [zk: localhost:2181(CONNECTED) 6] ls /hbase/replication/peers

References:

- Title: oldWALs File-Count Anomaly Caused by an Abnormal HBase Peer State

- Author: Ordiy

- Created at : 2022-02-24 17:05:36

- Updated at : 2025-03-26 09:39:38

- Link: https://ordiy.github.io/posts/2022-02-21-hbase-region-server-error-md/

- License: This work is licensed under CC BY 4.0.